Limiting the rates ☕ (️revised)

A rate limiter is used to control the rate of traffic send by a client or a service. rate limit refers to preventing the frequency of an operation from exceeding some constraint.

Introduction

I’ve gathered some insightful concepts from the book “System Design Interview,” along with valuable resources on rate-limiting strategies and techniques.

These include “Rate-limiting strategies and techniques” from Google Cloud Architecture, an engaging article called “An alternative approach to rate limiting” on Figma’s blog, and an informative guide on rate limits titled “Rate limits: Standard v1.1 Twitter.”

These materials offer fresh perspectives and practical advice for optimizing system performance while ensuring fair usage. Dive into these captivating resources to discover effective ways to tackle rate-limiting challenges in your designs.

Reasons

- to put in place a defensive measure for services (this is quite essential for large companies)

- protect services from excessive use

- put in place a “fair use policy”

- Prevent resource starvation

- reduce cost

Requirements

- accurate limit excessive request

- low latency, the limiter should not slow down the service

- use little memory

- distributed

- exception handling

- high fault tolerance

Throttling algorithms

Token bucket

- Each request utilizes one token.

- Tokens are replenished at regular intervals of time (every n amount).

- In case there are no tokens available:

- The request can be stored for later handling.

- Alternatively, the request can be dropped.

Variables (Token bucket)

- Bucket size

- Refill rate

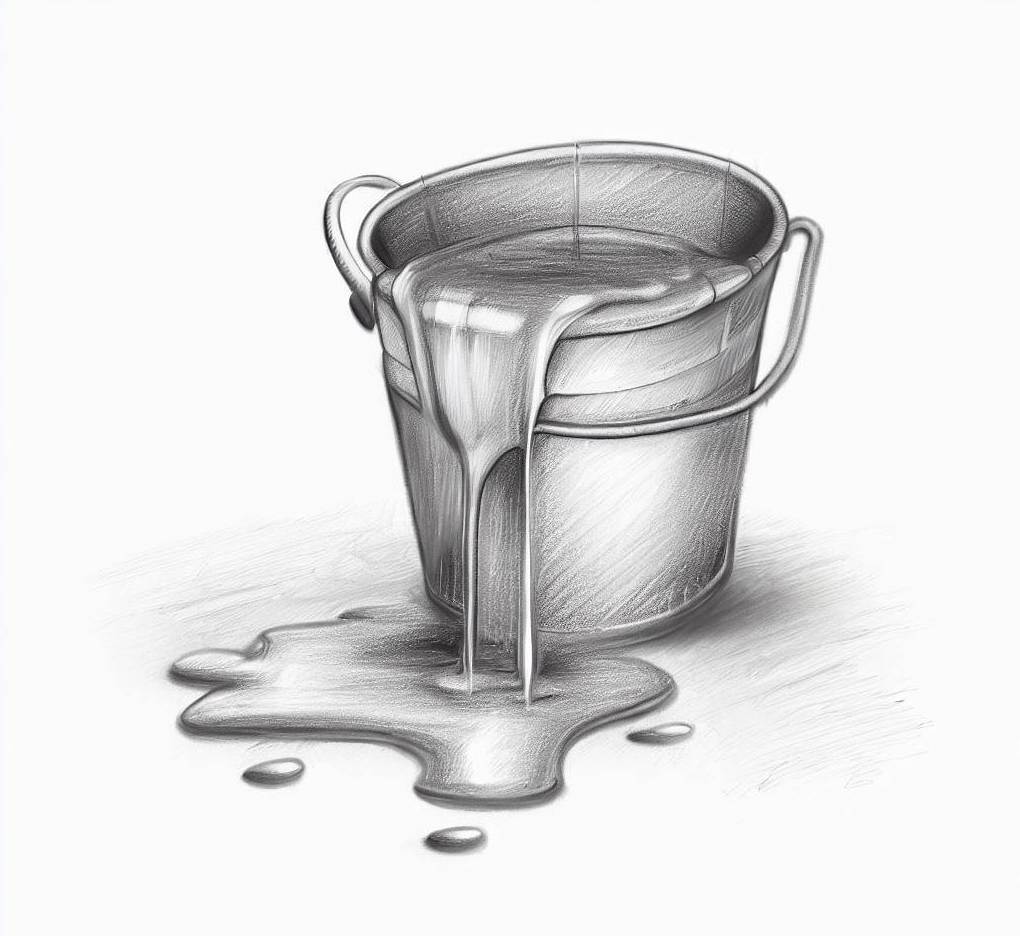

Leaky bucket

This algorithm utilizes a queue (FIFO) to manage requests. If the bucket is already at its maximum capacity, any new requests will be ignored.

The process involves adding new requests to the queue (bucket). If the queue is full, there are two options: the request can either be dropped or saved for later processing.

At regular intervals, requests are pulled from the queue and processed.

Pros (Leaky bucket)

- Memory efficient, limited queue size

- Request are processed at a fixed rate

Cons (Leaky bucket)

- In the event of a burst of traffic, new requests can merge with older requests if the latter have not been processed in a timely manner.

Variables (Leaky bucket)

- The bucket size refers to the maximum capacity of the bucket, indicating the number of requests it can hold at any given time.

- The overflow rate determines the number of requests that can be processed within a fixed rate or time interval. It represents the rate at which the bucket can handle incoming requests without overflowing.

Fixed window counter

- The algorithm partitions the timeline into time windows of fixed size.

- For each request, the counter corresponding to each window is incremented.

- Time edges can be a challenge.

- This can result in the possibility of having twice as many allowed requests.

Pros (fixed window)

- Memory efficient

- Fixed counter per n amount of time can fit some use cases

- Easy to understand

Variables (fixed window)

- Counter per window

- Window time

Sliding window log

- keep track of the timestamps (request)

- when a new request comes in remove all outdated timestamps, those are defined to be older than the current time window

- add timestamp of the new request to the log

- if the log size is bigger than the max allowed drop / save else handle normally

Pros (sliding window log)

- The algorithm is very accurate

Cons (sliding window log)

- Memory hard

Variables (sliding window log)

- Request per minute

Sliding window

Similar to the fixed window approach, this algorithm employs a timer and a counter to manage the maximum number of available requests per user. However, in this case, the timer begins counting only after the first request is made.

This algorithm combines the benefits of both the bucket and fixed window approaches. Unlike the previous methods, the sliding window implementation ensures that newer requests are not starved, and the system is not overwhelmed by bursts of requests.

Pros (sliding window)

-

Smooths out spikes by basing the current rate on the average rate of the previous window.

-

It is memory efficient.

Variables (sliding window)

- Request per minute

- Prev minute

- Prev percentage

- Current minute

- Current time

- Current percentage

References

system design interview Rate-limiting strategies and techniques, An alternative approach to rate limiting & Rate limits: Standard v1.1 twitter